“You’re at odds with others with much more resources.”

“If it was that good, someone smarter would’ve thought of it.”

“That’s in theory. Real life doesn’t work like that.”

“You’re not qualified enough.”

Author: Kelvin Ang

Ang Kah Min, Kelvin

Singapore

NUS Computer Engineering

Shopee Engineering Infra

Marketplace SRE

You can gauge a person’s ability to succeed…

…from the way the person responds to setbacks.

If my attention span is not 5 ****ing minutes,…

…maybe I would have accomplished something by now.

What to do with your money

Digest these tables and draw your own conclusions.

| Decade | Average Inflation Rate in Singapore | Average Inflation Rate in US |
| --- | --- | --- |
| 1960s | 1.60% | 2.10% |
| 1970s | 6.70% | 7.10% |
| 1980s | 2.60% | 5.80% |
| 1990s | 1.80% | 2.90% |
| 2000s | 1.60% | 2.50% |
| 2010s | 2.20% | 1.80% |
| 1960-2020 | 2.40% | 3.80% |

| Asset Class | Lowest Average 1 Year Period Returns since 1960s | Lowest Average 10 Year Period Returns since 1960s | Lowest Average 20 Year Period Returns since 1960s | Lowest Average 30 Year Period Returns since 1960s |
| --- | --- | --- | --- | --- |
| Stocks | -37.00% | -12.30% | 2.60% | 5.90% |
| Bonds | -8.10% | -4.30% | 0.60% | 2.00% |
| Real Estate | -26.20% | -4.80% | 1.60% | 3.40% |
| Commodities | -43.40% | -17.80% | -5.50% | -1.10% |
| Cash and Cash Equivalents | 0.10% | 0.30% | 0.90% | 1.30% |
| Mutual Funds/ETFs | -37.20% | -10.60% | 2.20% | 4.90% |
| S&P 500 (Mutual Funds/ETFs) | -43.30% | -13.90% | 5.90% | 7.10% |
| Cryptocurrencies | -83.00% | N/A | N/A | N/A |
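
If you would rather poke at the numbers than stare at them, here is a rough sketch. It assumes the usual Fisher relation for inflation-adjusted returns, and it pairs the worst 30-year averages with the 1960-2020 Singapore inflation average. That pairing is my own simplification: the two tables do not cover the same windows, so treat the output as illustrative only. The function names are made up for this example.

```python
def real_return(nominal: float, inflation: float) -> float:
    """Inflation-adjusted annual return using the Fisher relation."""
    return (1 + nominal) / (1 + inflation) - 1


def purchasing_power(years: int, inflation: float) -> float:
    """Fraction of today's purchasing power left if money sits idle for `years`."""
    return 1 / (1 + inflation) ** years


# Figures copied from the tables above.
# Assumption: the long-run Singapore average is applied to every asset class.
SG_INFLATION = 0.024
WORST_30Y = {
    "Stocks": 0.059,
    "Bonds": 0.020,
    "Real Estate": 0.034,
    "Commodities": -0.011,
    "Cash and Cash Equivalents": 0.013,
}

for asset, nominal in WORST_30Y.items():
    adjusted = real_return(nominal, SG_INFLATION)
    print(f"{asset}: {adjusted:+.2%} per year after inflation")

left = purchasing_power(30, SG_INFLATION)
print(f"Idle money after 30 years keeps about {left:.0%} of its purchasing power")
```

Swap in the US inflation column, or a different holding period, and see whether your conclusions change.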

Laptop broke. New laptop’s audio isn’t working in Linux.

Getting ASUS ROG Zephyrus M16 audio to work in Linux – had to patch + recompile BIOS, and recompile kernel with quirk.

Here it is: https://forums.linuxmint.com/viewtopic.php?p=2315415&sid=8c7e3bd57dcada226b6bf03f8479e625#p2315415

A large part of your (un)happiness in life…

…comes from who you’re comparing yourself with.

😊☹️😊☹️😊☹️😊☹️

Doctors thought that I have bipolar disorder, and my friends say that my mood is unpredictable.

But my mood is perfectly predictable – there’s even a chart for it: NYSE: SE

Easy or hard?

It’s not “I can’t do it”, but “how can I do it?”.

It’s not “I don’t have time”, but “how can I have time?”.

It’s not “I can’t afford it”, but “how can I afford it?”.

One shuts your mind; the other opens.

One gives you an excuse; the other a challenge.

One is how most think; the other, you.

There’s nothing to fear

Your entire life, everything you know, and everyone you love, is contained on the surface of an insignificant speck of dust you call “Earth”; an insignificant speck of dust floating in the vastness of the solar system, the galaxy, the supercluster, the universe, and beyond.

To fear, is to think that any of your actions matter, that your feelings matter, that you matter, in this vastness that no one can even begin to comprehend.

You are humble, and there’s nothing to fear.

There’s nothing to fear.

The Alpha Male

The most confident, competitive and bold individuals in a group of social animals are often the alphas of the dominance hierarchy. These alphas exhibit leader-like or dominant behavior and get things done, while the betas exhibit follower-like or submissive behavior and make up the majority of the herd.

The alphas have more responsibilities, providing for the pack and protecting them from threats, while being rewarded through preferential access to resources, including food and mates.

When it comes to us Homo sapiens, it’s no surprise that about 70% of senior executives are alpha males. These individuals not only take on responsibilities others would find overwhelming, they willingly do so.

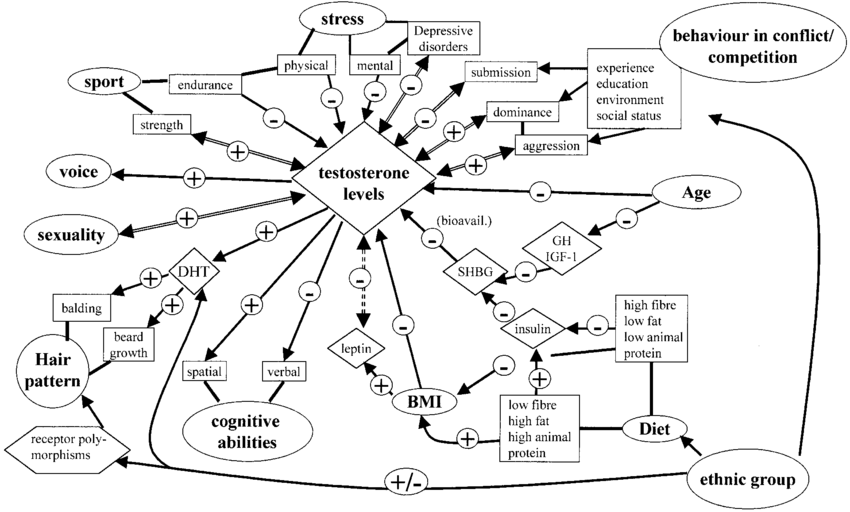

What makes alphas confident, assertive, challenge-seeking, and able to take stress is often attributed to the magic fuel: testosterone.

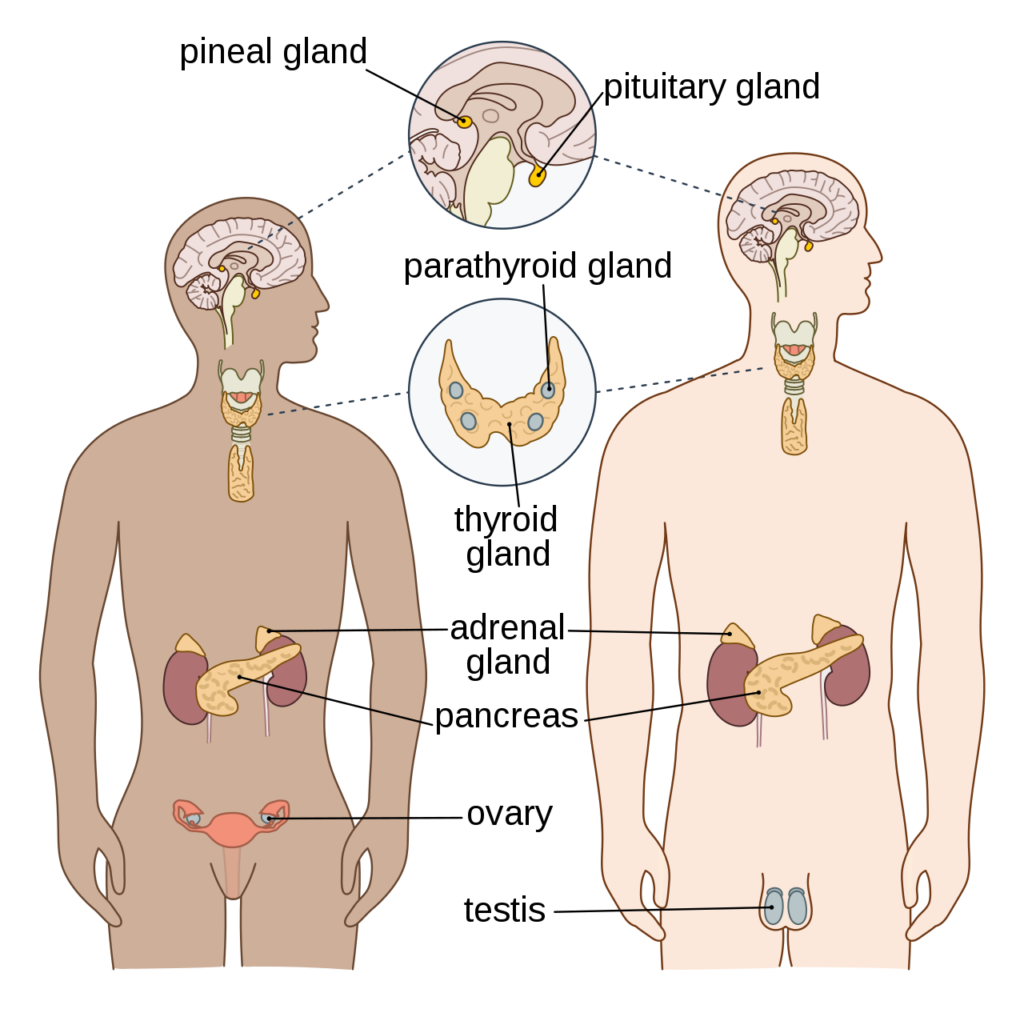

Testosterone is often, but misleadingly, called the “male hormone”, and is one of the over 50 hormones regulated by the endocrine system. Though it is mainly synthesized in the male testes, it is also produced by the female ovaries. Females produce it in smaller quantities, but they are actually more sensitive to it.

(This may be copyrighted, please contact me to remove.)

Among countless benefits, testosterone is known to improve spatial cognitive abilities, improve physical and mental endurance, as well as reduce anxiety and depressive disorders. Testosterone also increases muscle mass and lowers body fat.

There is a price to pay, though.

While being the alpha may seem great, alphas are often short-lived, both in terms of their hierarchical rank and their life expectancy.

Having a high level of testosterone also indirectly leads to a higher metabolism, which requires more food to sustain. More importantly, alphas are also typically exposed to higher levels of stress, prompting the release of cortisol.

In the short term, cortisol signals the body to prepare to handle danger by raising blood pressure, elevating the metabolism of fat, protein and carbohydrates, and keeping you alert. However, it also disrupts the testosterone biosynthesis pathway.

Long-term exposure to cortisol and lowered testosterone, especially with increasing age, can lead to anxiety disorders, depression, brain fog, lowered metabolism, decreased muscle mass and increased weight. This sets off a vicious cycle that drives testosterone levels even lower. In other words, you quickly become fat and beta. That’s when you know you’re past the tipping point.

The higher you go, the more stressful your work, the more likely I’m talking about you. Therefore, it is important to ensure that your endocrine system is always in the right condition to maintain a healthy level of testosterone. This includes proper management, and possibly biohacking, of these key factors: Diet, Exercise, Sleep, Sex, Lifestyle, Supplementation.

Consult a real doctor.